Ducklake

Ducklake allows you to store massive amounts of data in S3, but still query it efficiently using DuckDB in natural SQL language.

Getting started

Prerequisites:

- A workspace storage configured

- A Postgres or MySQL resource (Optional for superusers)

Superusers can use the Windmill database as a catalog for Ducklake with no additional configuration.

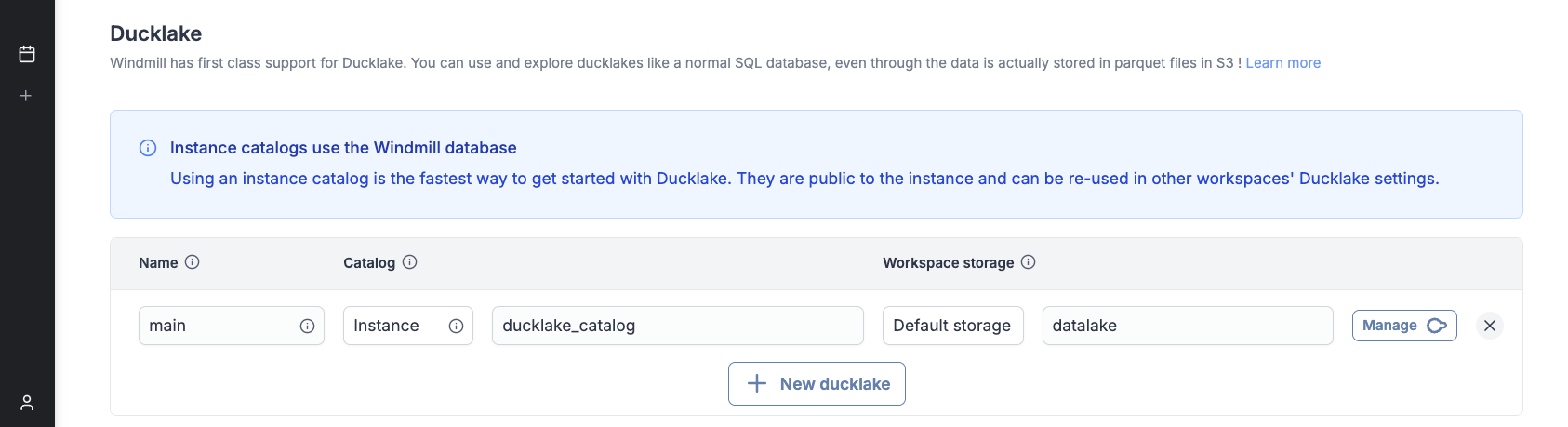

Go to workspace settings -> Object storage (S3) and configure a Ducklake :

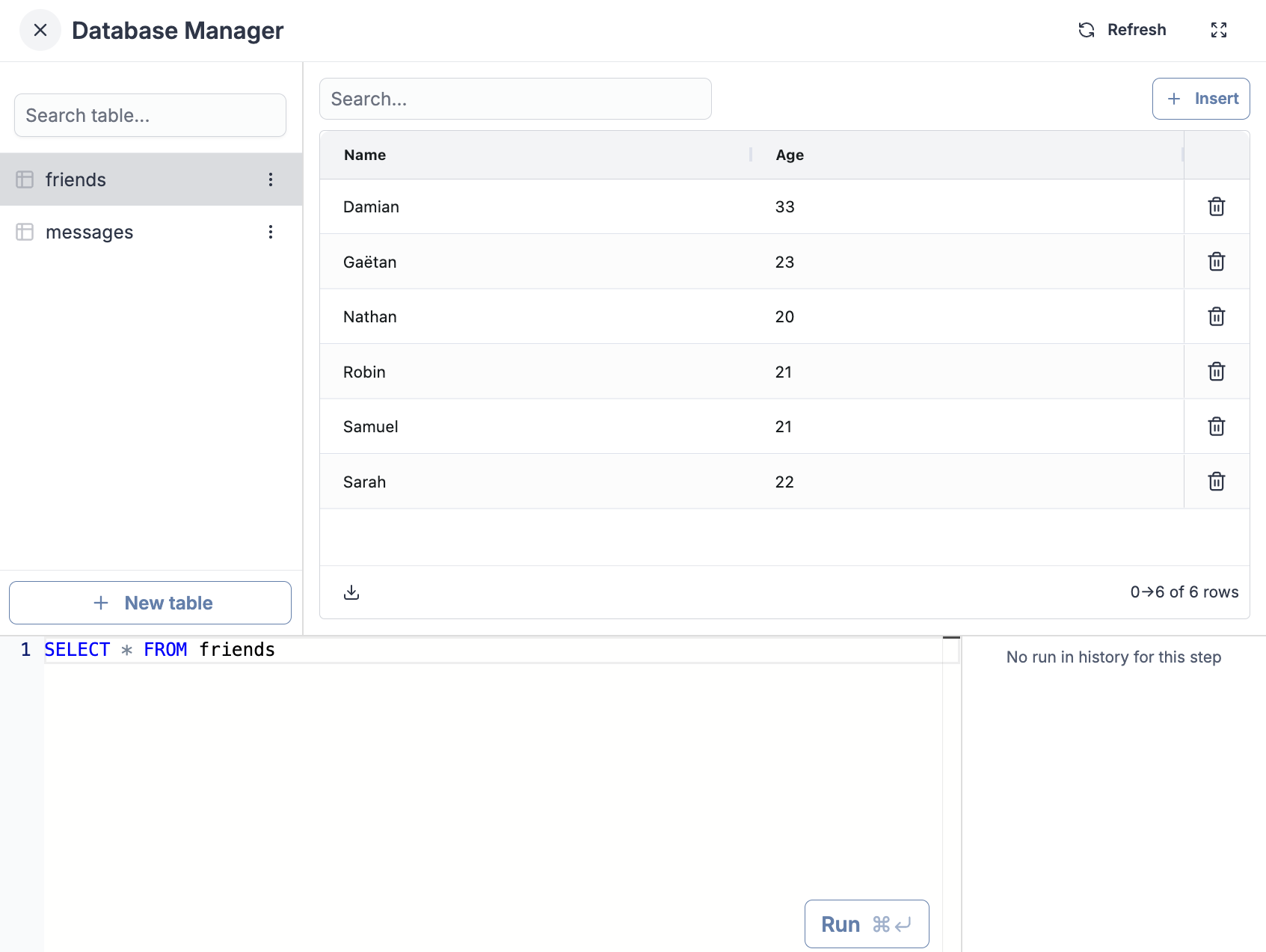

Clicking the "Explore" button will open the database manager. Your ducklake behaves like any other database : you can perform all CRUD operations through the UI or with the SQL Repl. You can also create and delete new tables.

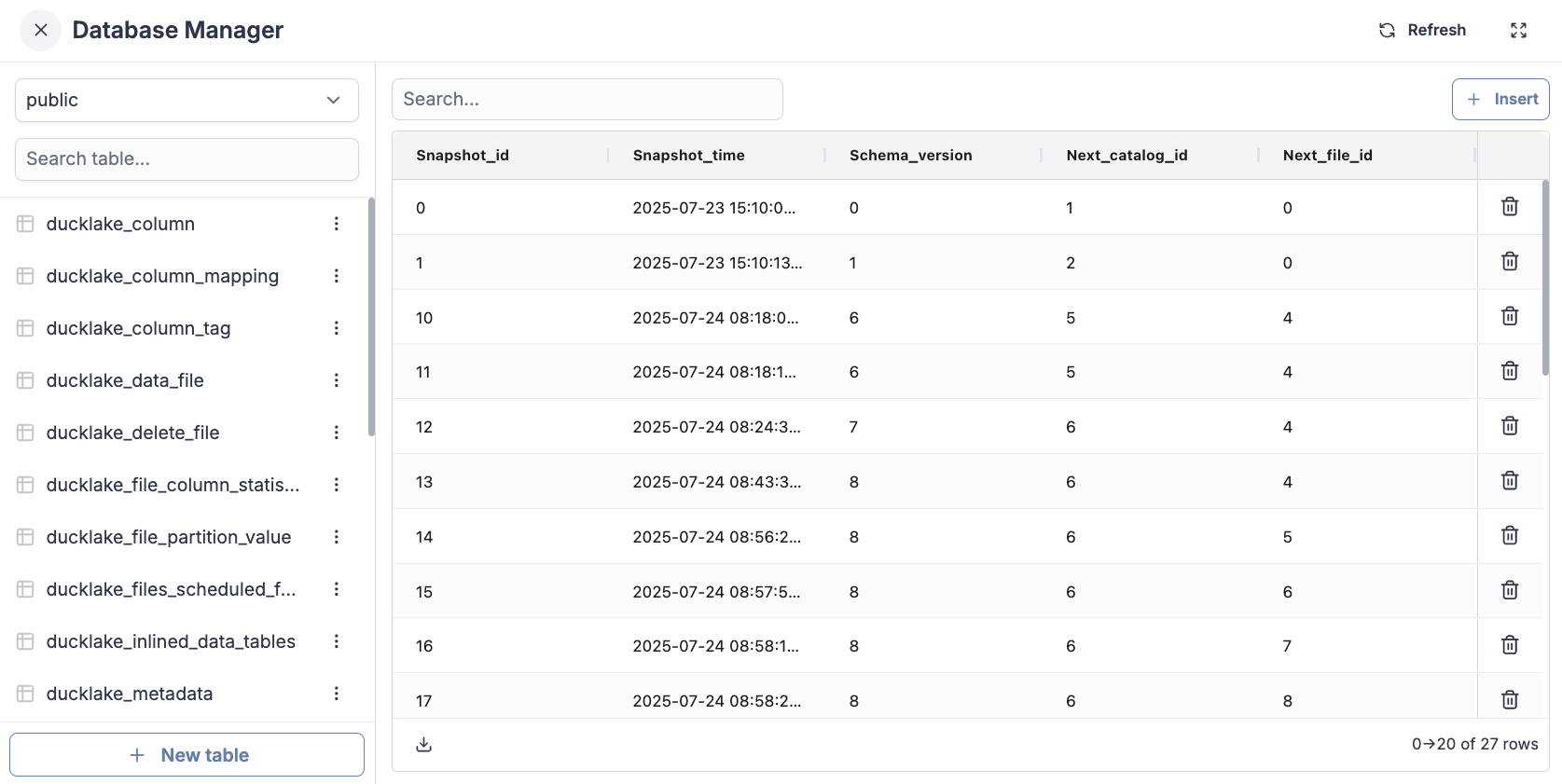

If you explore your catalog database, you will see that Ducklake created some tables for you. The catalog database corresponds to the resource you specified as the catalog database in your Ducklake configuration. You can visualize its content from the resources page in your workspace. Note that it is currently not possible to visualize the content of a catalog of type Instance.

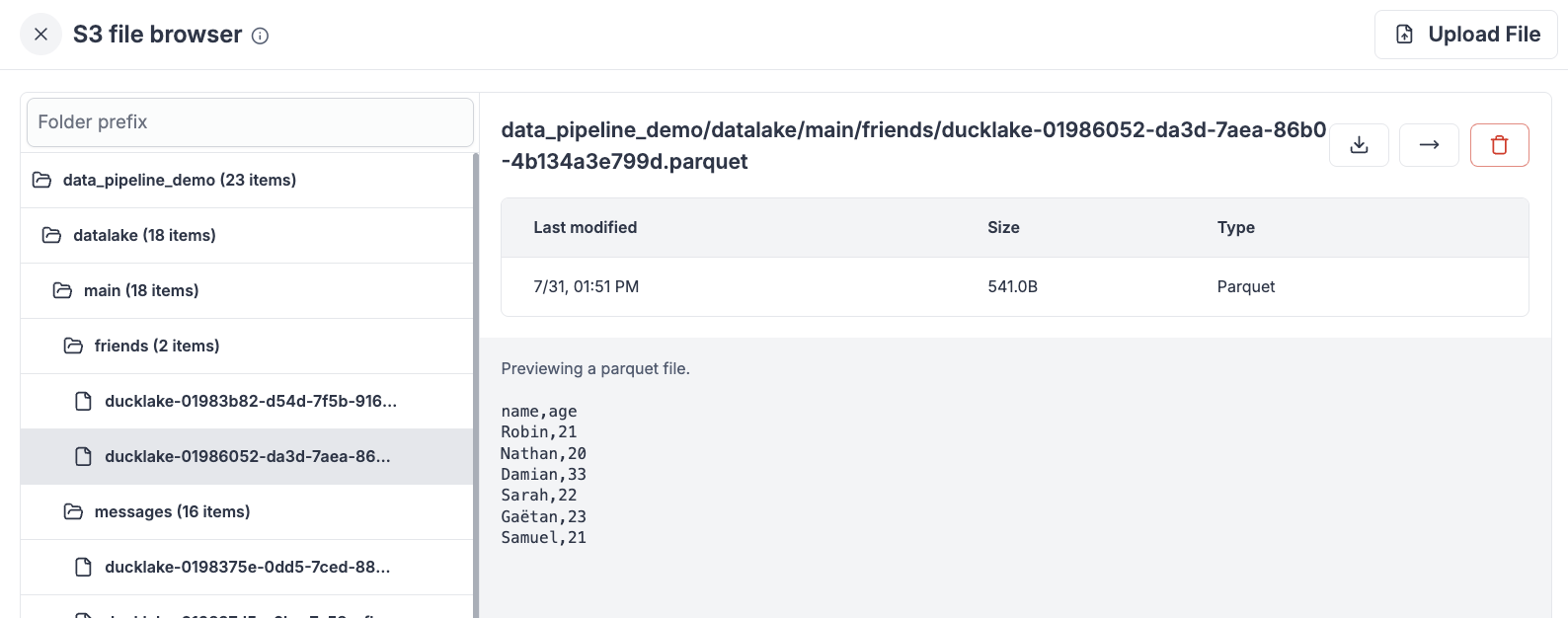

These metadata tables store information about your data and where it is located in S3. If you go to your workspace storage settings, you can explore your selected workspace storage at the configured location and see your tables and their contents :

Using Ducklake in DuckDB scripts

Ducklakes are referenced in a URI style : ducklake://name, and can be accessed in DuckDB scripts using the ATTACH syntax.

For example, if you named your Ducklake my_ducklake, you can attach it like this:

ATTACH 'ducklake://my_ducklake' AS dl;

USE dl;

Naming your ducklake main will allow you to attach it with the shorthand syntax :

ATTACH 'ducklake' AS dl;

The original syntax ATTACH 'ducklake:postgres:connection_string' does not benefit from Windmill's integration.

You can use the Ducklake button in the editor bar for convenience, which will write the ATTACH and USE statement for you.

In the example below, we pass a list of messages with positive, neutral or negative sentiment.

This list might come from a Python script which queries new reviews from the Google My Business API,

and sends them to an LLM to determine their sentiment.

The messages are then inserted into a Ducklake table, which effectively creates a new parquet file.

-- $messages (json[])

ATTACH 'ducklake://main' AS dl;

USE dl;

CREATE TABLE IF NOT EXISTS messages (

content STRING NOT NULL,

author STRING NOT NULL,

date STRING NOT NULL,

sentiment STRING

);

CREATE TEMP TABLE new_messages AS

SELECT

value->>'content' AS content,

value->>'author' AS author,

value->>'date' AS date,

value->>'sentiment' AS sentiment

FROM json_each($messages);

INSERT INTO messages

SELECT * FROM new_messages;