Dedicated workers / High throughput

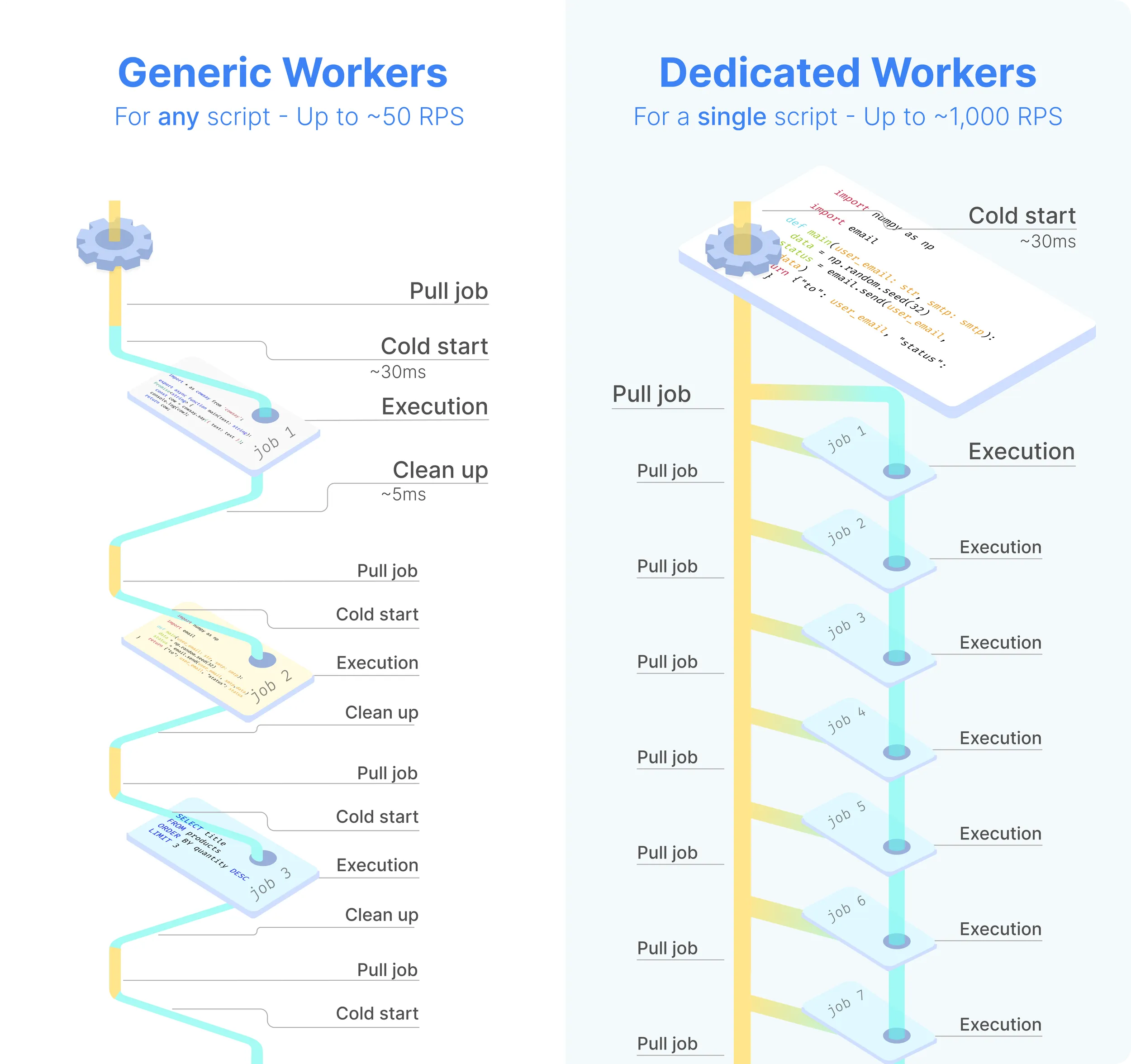

Dedicated Workers are workers that are dedicated to a particular script. They are able to execute any job that target this script much faster than normal workers at the expense of being capable to only execute that one script. They are as fast as running the same logic in a forloop, but keep the benefit of showing separate jobs per execution.

Workers serve as the backbone of Windmill. Workers are autonomous processes that run one script at a time using the full cpu and memory available to them.

To put it simply, workers are responsible for executing code in Windmill. A single normal worker in Windmill is capable of handling up to 26 million tasks a month, with each task taking roughly 100ms. You can easily scale the number of workers horizontally without incurring extra overhead.

Typically, workers fetch jobs from the job queue based on their scheduled_for datetime, provided it's in the past. Once a worker retrieves a job, it immediately changes its status to "running", processes it, streams its logs, and upon completion, archives it in the database as a "finished job". Both the final outcome and the logs are preserved indefinitely.

This simple process allows one to reliably count on a small number of workers for very different jobs. However, there is a latency between each task since the worker must perform a clean-up and a cold start between each execution (to be able to handle successive jobs but from completely different scripts). This latency amounts to around a dozen milliseconds, which can be crucial in the execution of certain priority jobs:

Dedicated workers allow you to remove completely the cold start for selected scripts. They are workers that are dedicated to a particular script. They are able to execute any job that target this script much faster than normal workers at the expense of being capable to only execute that one script. They are as fast as running the same logic in a while-loop processing requests, that is how they are implemented actually, but keep the benefit of showing separate jobs per execution.

Dedicated workers / High throughput are a Cloud plans & Self-Hosted Enterprise feature.

Dedicated workers work with TypeScript and Python scripts, they have the highest cold starts. Queries to databases such as PostgreSQL, MySQL, BigQuery, or bash and go scripts do not suffer from any cold starts and hence have the same benefits already without any compexity.

The scripts can be used within flows.

Dedicated workers are faster than AWS lambda: https://www.windmill.dev/docs/misc/benchmarks/aws_lambda.

How to assign dedicated workers to a script

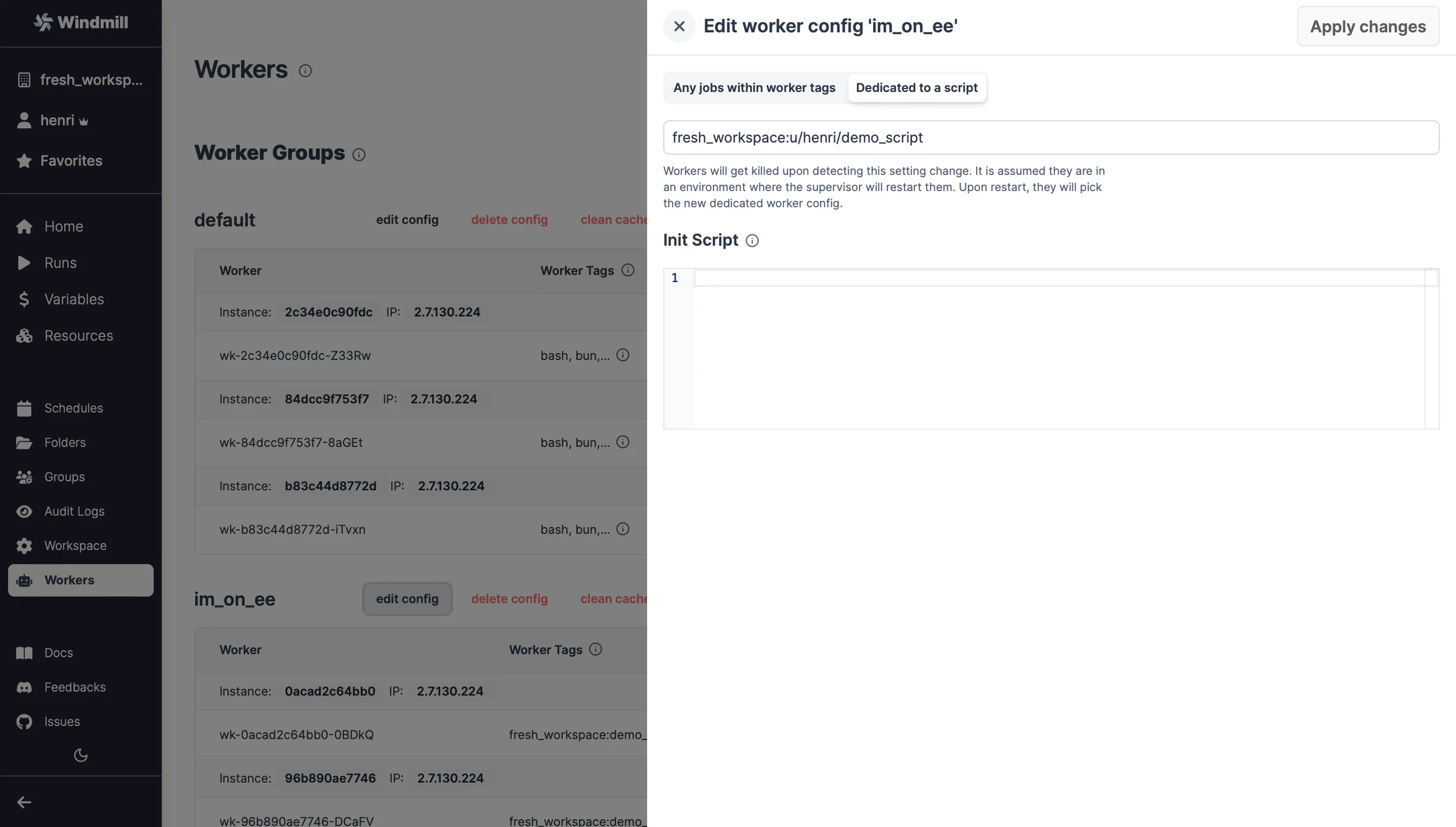

From Windmill UI's Workers page:

- "Edit config" of a worker group and enter script's workspace & path as

worker:path.

The worker group will restart (assuming the pods/restart are set to restart automatically) and will now wait for step 2. below to happen:

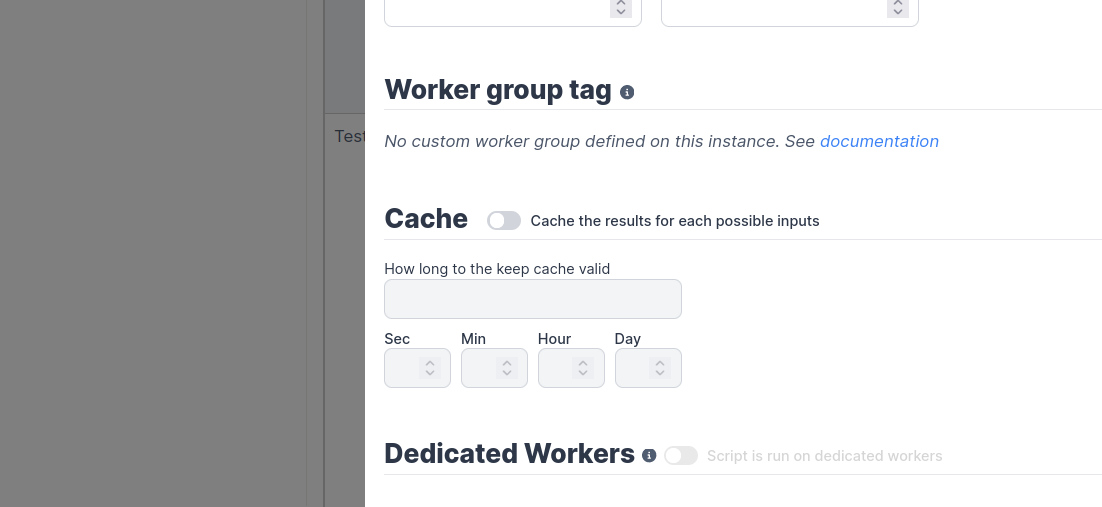

- Toggle the "Dedicated Workers" option for that script in the script Settings:

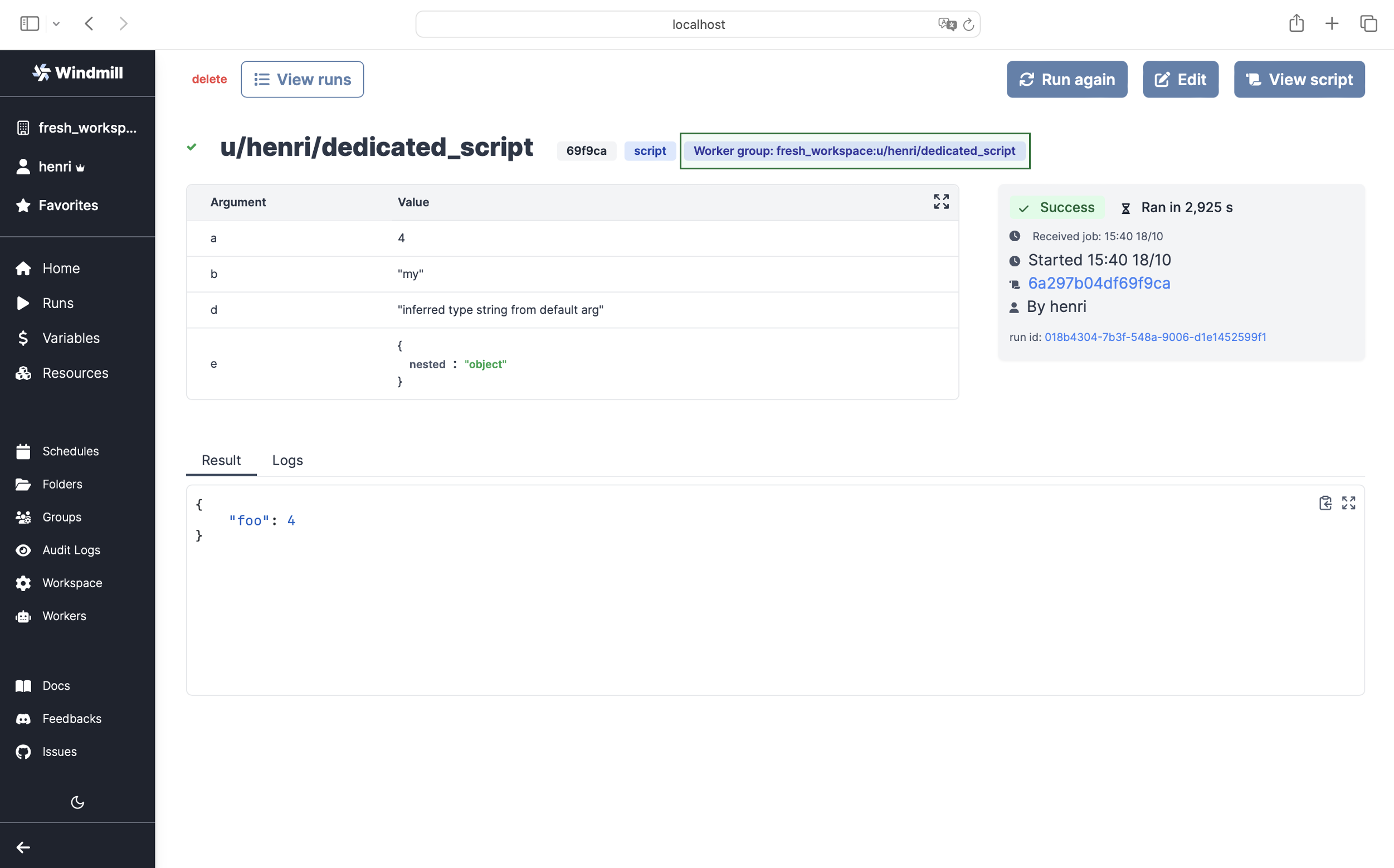

Each run will have a Worker group tag assigned to it. Worker group tags allow to assign custom worker groups to scripts and flows in Windmill for efficient execution on different machines with varying specifications.

Dedicated workers for flows

Dedicated workers can also be assigned to a flow. In that case, the dedicated worker will start one runner for each flow step that supports it (TypeScript and Python), eliminating cold start between each execution. Other steps will be run on the same worker but without any optimization.

To enable it, the process is the same as for scripts, but the "Dedicated Workers" option for that flow has to be enabled in the flow Settings.