6 posts tagged with "Scripts"

PowerShell scripts now support private repositories and array/object parameters.

New features

- Support for private repository access

- Parameter support for arrays and objects (PSCustomObject)

Visualize your data flow and automatically track where your assets are used.

New features

- Assets are automatically parsed from your code

- Read / Write mode is inferred from code context

- Add S3 file assets easily through the editor bar

- Flow graph displays asset nodes as input or output depending on the inferred read / write mode

- You can manually select the Read / Write mode for an asset when it is ambiguous in the code

- Passing a resource or an S3 file object as a flow input displays it as an input asset in the run preview

- Assets page to see where your assets are used

- Explore database / S3 Object Preview button

- Unified URI syntax (res://path/to/res, s3://storage/path/to/file.csv, etc)
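
As a rough illustration of the unified URI syntax, the sketch below splits an asset URI into its scheme and path. It is not Windmill's own parser: the `AssetRef` type, the `parse_asset_uri` function, and the rule that the first segment of an `s3://` URI names the storage are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AssetRef:
    """Hypothetical container for a parsed asset URI."""
    kind: str                # URI scheme, e.g. "res" or "s3"
    storage: Optional[str]   # storage name for s3 URIs, otherwise None
    path: str                # resource path or object key


def parse_asset_uri(uri: str) -> AssetRef:
    """Split '<scheme>://<rest>' into an AssetRef (illustrative rules only)."""
    scheme, sep, rest = uri.partition("://")
    if not sep or not scheme or not rest:
        raise ValueError(f"not a valid asset URI: {uri!r}")
    if scheme == "s3":
        # Assumption: the first segment is the storage, the remainder the key.
        storage, _, key = rest.partition("/")
        return AssetRef(kind="s3", storage=storage, path=key)
    # For other schemes (such as res), keep the whole remainder as the path.
    return AssetRef(kind=scheme, storage=None, path=rest)


res_asset = parse_asset_uri("res://path/to/res")
s3_asset = parse_asset_uri("s3://storage/path/to/file.csv")
```

Keeping one shape for every asset kind is what lets a single page or graph list resources and S3 files side by side.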

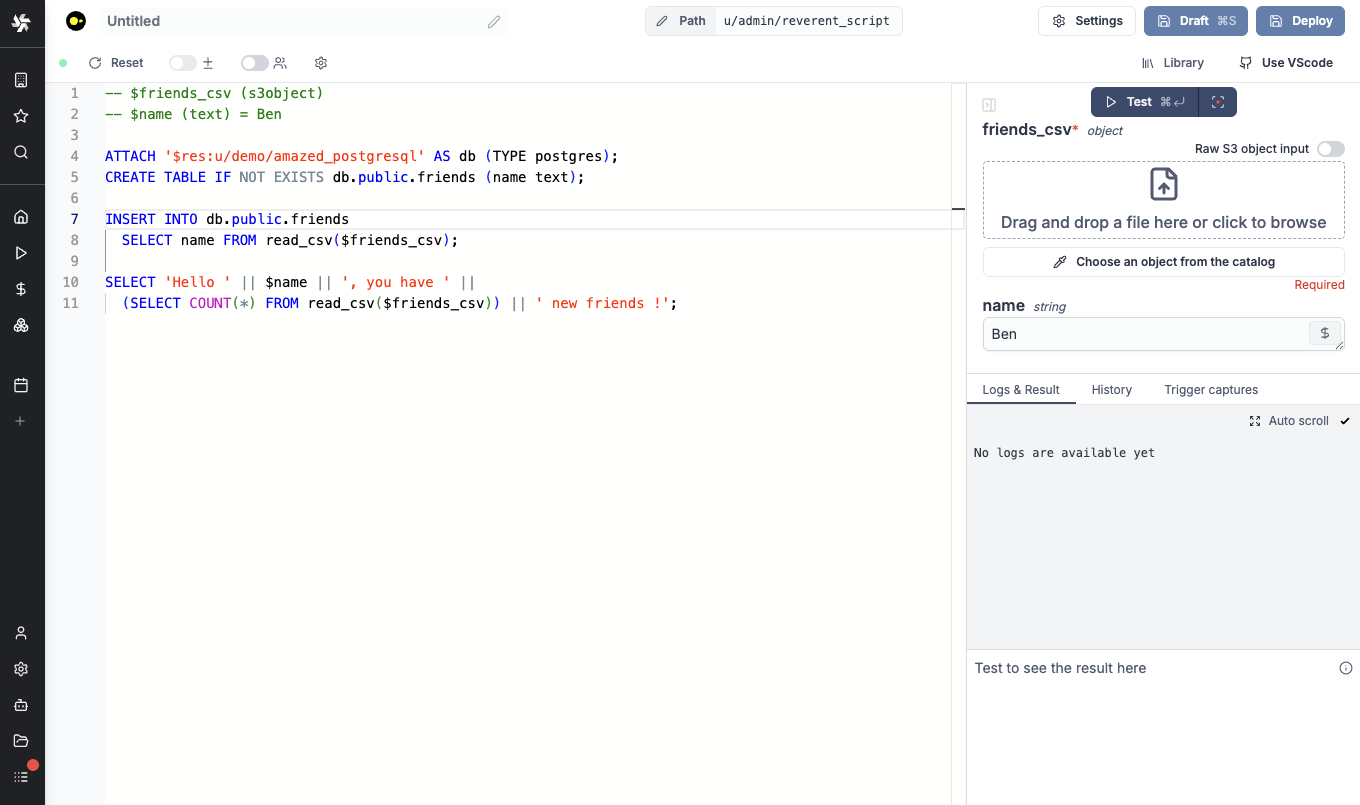

You can run DuckDB scripts in-memory, with access to S3 objects and other database resources. You no longer need a scripting language with DuckDB/Polars for your ETL pipelines; you can write them entirely in SQL.

New features

- S3 object integration

- Attach to BigQuery, Postgres and MySQL database resources with all CRUD operations

You can stream the results of a large SQL query to an S3 file in your workspace storage.

New features

- Supported formats: json (default), csv, parquet

- Set object key prefix

- Select a secondary storage

You can now use a pin annotation to specify the dependency you want associated with an import. In contrast to the "#requirements:" syntax, a pin applies per import rather than per script.

New features

- Python Pins
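
To make the per-import idea concrete, here is a small sketch that collects one pinned requirement per import line. It assumes the annotation is written as a trailing `# pin: <requirement>` comment; that comment form, the `collect_pins` helper, and the package versions shown are illustrative assumptions, not a description of how Windmill resolves pins.

```python
import re

# Assumed annotation form: an import line ending in "# pin: <requirement>".
_PIN = re.compile(
    r"^\s*(?:import|from)\s+([A-Za-z_][\w.]*).*#\s*pin:\s*(\S+)\s*$"
)


def collect_pins(source: str) -> dict[str, str]:
    """Map each top-level imported module to the requirement pinned on its line."""
    pins: dict[str, str] = {}
    for line in source.splitlines():
        match = _PIN.match(line)
        if match:
            top_level_module = match.group(1).split(".")[0]
            pins[top_level_module] = match.group(2)
    return pins


# Hypothetical script: two imports carry a pin, one does not.
example_script = """\
import pandas  # pin: pandas==2.2.0
from numpy import array  # pin: numpy==1.26.4
import json
"""

pins = collect_pins(example_script)
```

Because each pin is attached to a single import, unpinned imports such as `json` above are simply left out of the result.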

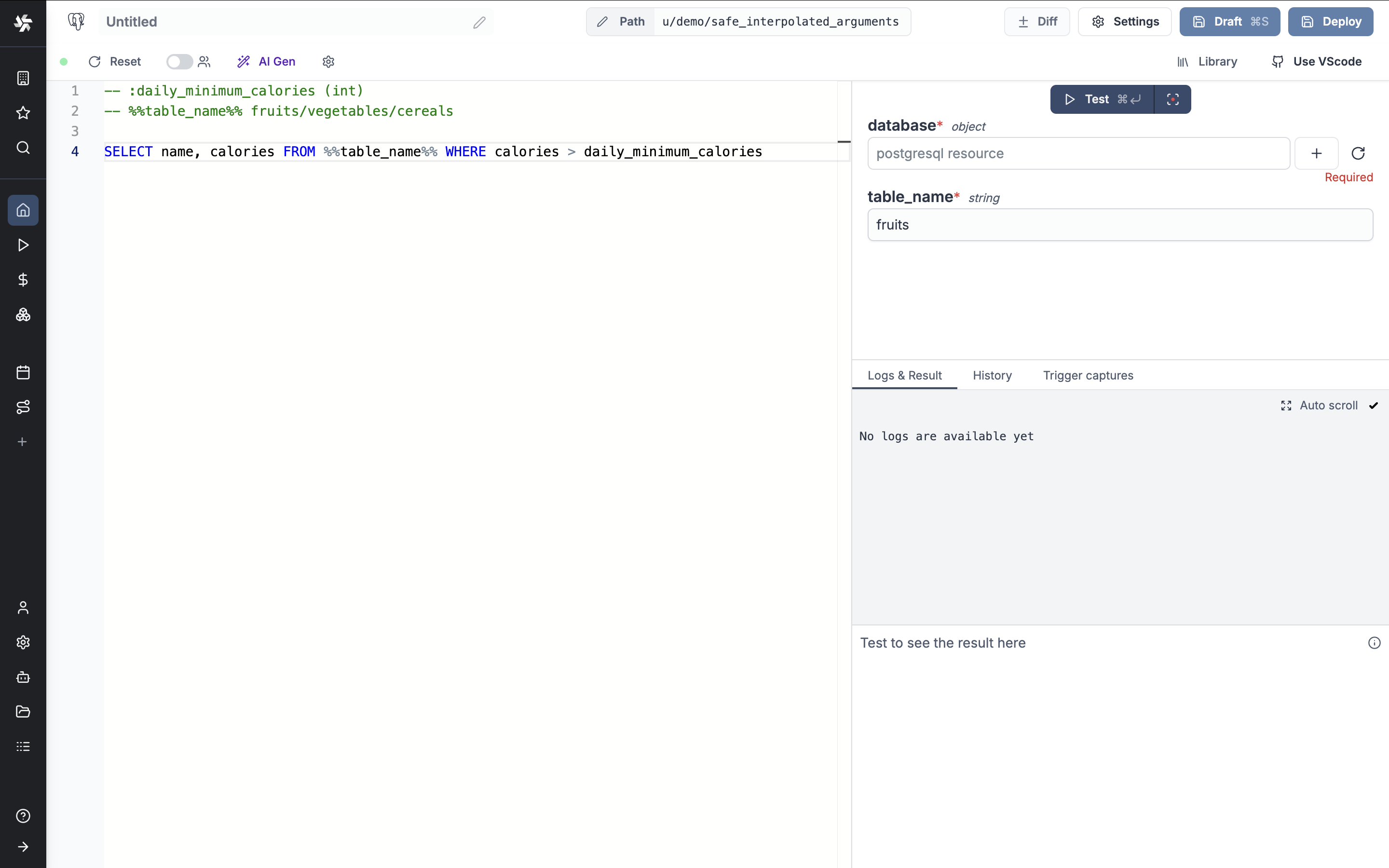

Backend schema validation and safe interpolated arguments for SQL queries.

New features

- Backend schema validation for scripts using the schema_validation annotation.

- Safe interpolated arguments for SQL queries using %%parameter%% syntax.

- Protection against SQL injections with strict validation rules for interpolated parameters.
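
The idea behind validated interpolation can be sketched as follows. This is a minimal illustration, not Windmill's implementation: the `interpolate` helper is hypothetical, and the validation rule used here (values must look like a plain SQL identifier) is an assumption standing in for whatever strict rules the backend applies.

```python
import re

# Placeholders use the %%parameter%% form named in the changelog entry.
_PLACEHOLDER = re.compile(r"%%(\w+)%%")
# Assumed validation rule: letters, digits and underscores only.
_SAFE_VALUE = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def interpolate(query: str, args: dict[str, str]) -> str:
    """Replace each %%name%% with args[name] after validating the value."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in args:
            raise KeyError(f"missing interpolated argument: {name}")
        value = args[name]
        if not _SAFE_VALUE.fullmatch(value):
            # Reject anything that could change the structure of the query.
            raise ValueError(f"unsafe value for {name}: {value!r}")
        return value

    return _PLACEHOLDER.sub(substitute, query)


safe_query = interpolate(
    "SELECT * FROM %%table_name%% LIMIT 10",
    {"table_name": "users"},
)
```

Interpolation like this suits parts of a query that cannot be bound as ordinary parameters, such as table or column names; regular values are better passed as bound arguments.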