3 posts tagged with "Streaming"

Integrate AI agents directly into your Windmill flows with comprehensive support for all major AI providers, multimodal capabilities, streaming responses, tool integration, and structured outputs. AI agents can process text and images, call Windmill scripts as tools, stream responses in real-time, and output structured data following JSON schemas.

New features

- Support for all major AI providers including OpenAI, Azure OpenAI, Anthropic, Mistral, DeepSeek, Google AI (Gemini), Groq, OpenRouter, Together AI, and custom AI endpoints.

- Real-time streaming responses with detailed payload types including token deltas, tool calls, tool execution, and tool results.

- Tool integration allowing AI agents to call Windmill scripts (inline, workspace, or Hub scripts) as tools for extended functionality.

- Image input support for AI analysis and processing of uploaded images (requires S3 object storage configuration).

- Structured output generation respecting JSON schemas for predictable, processable responses.

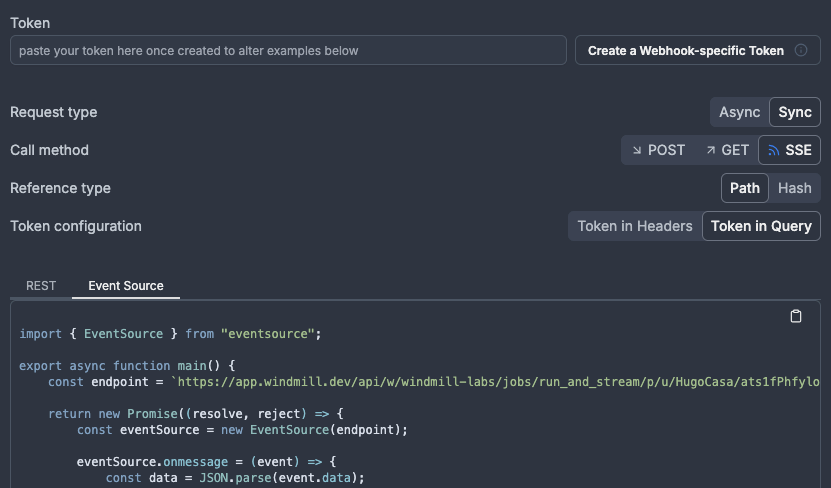

Extended streaming capabilities to flows in addition to scripts, enabling real-time streaming for AI agents and other flow steps. Added new SSE endpoints to start and listen to jobs with comprehensive streaming support.

New features

- Extended streaming support to flows - flows can now stream results when the last step returns a stream.

- New SSE stream webhooks for scripts and flows.
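As a rough illustration of what listening to an SSE stream involves on the client side, here is a minimal sketch of a parser for the Server-Sent Events wire format. It deliberately makes no network call: the actual endpoint paths are not given here, and the JSON payload shape used in the sample (`type`, `content`) is a hypothetical stand-in, not Windmill's documented format.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[str]:
    """Yield the data payload of each SSE event from an iterable of text lines."""
    data: list[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if data:
                yield "\n".join(data)
                data = []
        elif line.startswith(":"):
            # Lines starting with a colon are comments (often keep-alives).
            continue
        elif line.startswith("data:"):
            value = line[len("data:"):]
            # A single leading space after the colon is not part of the value.
            if value.startswith(" "):
                value = value[1:]
            data.append(value)
        # Other fields (event:, id:, retry:) are ignored in this sketch.


# Hypothetical sample stream; the payload fields are assumptions for illustration.
sample = [
    ": keep-alive\n",
    'data: {"type": "token_delta", "content": "Hel"}\n',
    "\n",
    'data: {"type": "token_delta", "content": "lo"}\n',
    "\n",
]

events = [json.loads(payload) for payload in parse_sse(sample)]
text = "".join(event["content"] for event in events)
```

In practice, `lines` would come from an HTTP response read line by line, and each decoded event would be handled as it arrives rather than collected into a list.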

We introduce native result streaming in 1.518.0. A script can return a stream (any AsyncGenerator or iterator-compatible object) as its result, or stream text before the result is fully returned. It works with TypeScript (Bun, Deno, Nativets) and Python, and is compatible with agent workers. For scripts that do not return the stream directly, we introduce 2 new functions in our SDK to stream mid-script: wmill.streamResult(stream) (TS) and wmill.stream_result(stream) (Python).
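A minimal sketch of the first option in Python, returning a generator directly from `main`. The `fake_llm_tokens` helper is a hypothetical stand-in for an LLM SDK's streaming response, used so the example runs without any external service.

```python
from typing import Iterator


def fake_llm_tokens(prompt: str) -> Iterator[str]:
    # Hypothetical stand-in for a streaming LLM response: yields one word at a time.
    for word in f"Echo: {prompt}".split(" "):
        yield word + " "


def main(prompt: str = "hello world") -> Iterator[str]:
    # Returning the generator itself (rather than a joined string) is what
    # lets the chunks be streamed as they are produced.
    return fake_llm_tokens(prompt)
```

To stream mid-script instead and still return a separate result, the same generator would be passed to `wmill.stream_result(stream)` before the final `return`.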

It was designed with LLM use cases in mind: many scripts now interact with LLMs whose streaming responses can be passed through as a streamed result. We will progressively refactor the relevant Hub scripts that are compatible with streaming to leverage this new capability.

The stream only exists while the job is in the queue. Once the job has finished, the full stream becomes the result (or is added as the field "wm_stream" if there is already a result).
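The rule above can be modeled in a few lines. This is only an illustrative sketch of the described behavior, not Windmill's implementation; `finalize_job` is a hypothetical name, and it assumes that an existing result is a JSON object (a dict).

```python
from typing import Any, Iterable, Optional


def finalize_job(chunks: Iterable[str], result: Optional[dict[str, Any]] = None) -> Any:
    """Illustrative model of how a finished job's result relates to its stream."""
    full_stream = "".join(chunks)
    if result is None:
        # No separate result: the concatenated stream is the result.
        return full_stream
    # A result already exists: attach the stream under the "wm_stream" field.
    return {**result, "wm_stream": full_stream}
```

For example, a job that streamed `"Hel"` and `"lo"` and returned nothing else would end with the result `"Hello"`, while one that also returned `{"tokens": 2}` would end with `{"tokens": 2, "wm_stream": "Hello"}`.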

New features

- Return stream objects to stream results directly.

- Compatible with LLM SDKs, so their responses can be streamed as-is.

- New SDK functions to stream from within a job.

- Once the job is finished, the full stream becomes the result.